(Governor J.B. Pritzker and Mayor Lori Lightfoot)

(Governor J.B. Pritzker and Mayor Lori Lightfoot)

NOTE: A version of this story is airing on NPR’s All Things Considered on December 15, 2020.

When we’re first learning to make music, most of us don’t worry about an A.I. stealing our flow. Maybe we should.

They say imitation is the sincerest for flattery. But when Jay-Z heard himself on the internet spitting iambic pentameter — Hamlet’s “To Be, or Not to Be” soliloquy, to be exact — “flattered” is hardly the word for how he responded. More like, furious. Because the voice wasn’t his. It wasn’t even human. It was produced by a well-trained computer speech synthesis program using artificial intelligence.

An anonymous YouTube artist named Vocal Synthesis has created a library of popular voices mismatched with unexpected famous texts, including George Bush reading “In Da Club” by 50 Cent, Barack Obama reading “Juicy” by Notorious B.I.G, and, yes, Jay-Z reading Hamlet (and Billy Joel’s “We Didn’t Start the Fire”). In popular culture terminology, these mashups are called deepfakes.

It’s All Fun And Games Until Jay-Z Gets Pissed

Jay-Z is not about having his voice used without permission, and his company Roc Nation LLC reportedly filed a takedown request to withdraw the videos from YouTube for copyright infringement. They were removed for a hot second but then re-released with a statement from YouTube saying the claims were “incomplete,” The Verge reports. We reached out to Roc Nation’s parent company, Live Nation, for comment and did not get a response.

So, did Jay-Z have a legal leg to stand on?

“There is the right of publicity, which is the right of a celebrity to be able to control how their image is commercialized,” said Patrice Perkins, an attorney with the Creative Genius law firm. Voice can count as part of your image, so Perkins found his response completely warranted. The implications are major, because anything Jay-Z does or doesn’t do can set a precedent for protecting and controlling how his, and other people’s, images are used going forward.

“Regardless of the law,” Perkins said, “I think the whole concept of A.I. in these deepfakes is so new that it’s hard to put a policy around it that would be a one-size-fits-all solution.”

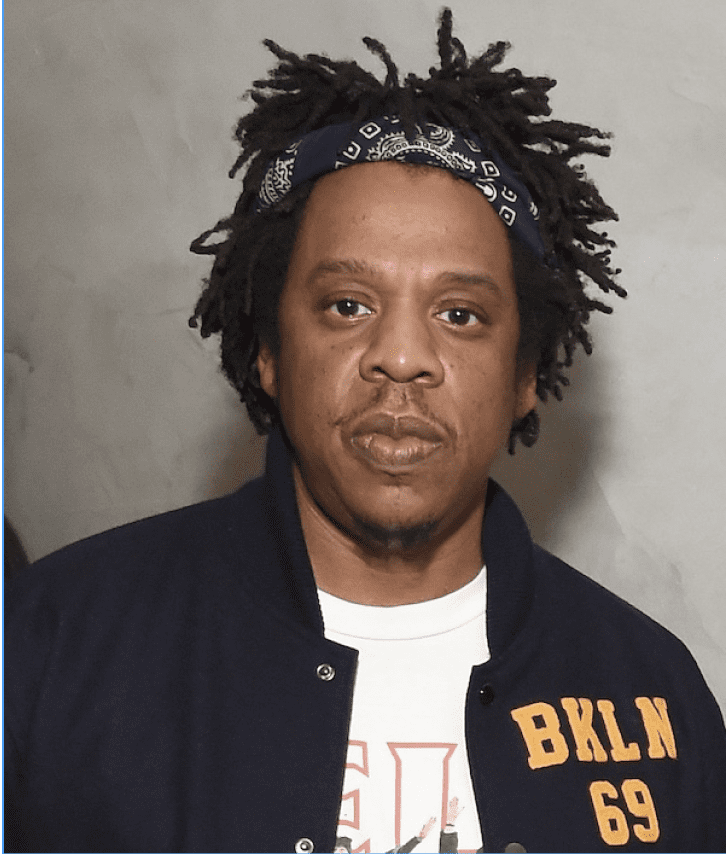

Jay-Z attends The Broad Museum celebration for the opening of Soul Of A Nation: Art in the Age of Black Power 1963-1983 Art Exhibition (Photo: Michael Kovac/Getty Images for Land Rover)

Vocal Synthesis Has Their Say

We couldn’t get Jay-Z on the phone, but Vocal Synthesis was willing to answer some of our questions via email, about the creative vision behind deepfakes and whether any of the pushback is justified.

Conversation lightly edited for clarity and length.

Can you walk us through what you had to do, step-by-step, to make the Jay-Z / Billy Joel deepfake?

First I had to gather/process a training set consisting of as much transcribed audio (i.e. songs + lyrics) of Jay-Z as I could find. This is a somewhat tedious/manual process, as I have to sync up the audio with the lyrics and filter out parts of the song that are unusable for training (e.g. if there are background singers or other sounds overlapping with the vocals). Once the training set is constructed, then I fine-tune the Tacotron 2 model, which takes 6-12 hours usually. [Editor’s note: A training set is the collection of data used to help machine learning models “learn” to perform a task. Tacotron 2 is a technology system that uses machine learning to produce believable speech from text.]. The result of this will be a voice model where I can pass in any text sentence-by-sentence and it will output audio of “Jay-Z” rapping the text.

Can you tell us about your relationship to hip hop?

Actually my knowledge of hip-hop is extremely limited, and is basically zero for anything released after 2010 or so. So far a lot of my videos have featured rap songs because they often provide a funny/entertaining contrast to the real-life persona of the voice being mimicked (e.g. Obama reading “99 Problems”). Also, it turns out that the model I’m using does a surprisingly good job replicating the voices of rappers (the Jay-Z and Notorious B.I.G. models are some of my favorites), so I’ll probably continue to train more of those. The result of this will be a voice model where I can pass in any text sentence-by-sentence and it will output audio of “Jay-Z” rapping the text.

Some artists aren’t laughing. They worry their talent could be used in ways they don’t want.

I can’t speak for anyone else, but at least my videos are intended just for entertainment, and are not meant to be malicious towards the voices I’m mimicking. All of my videos are clearly labeled as speech synthesis, so there is no intention to deceive anyone into thinking that they’re really saying these things.

When you started releasing these deepfakes, did you think anyone would come after you for copyright infringement?

I didn’t expect that YouTube would take down an A.I. impression of someone’s voice for copyright infringement, as that had never happened before (as far as I’m aware). So no, I wasn’t planning for that to happen.

More broadly, how do you make the case that deepfakes should be legal?

I think it would be an extreme overreaction to ban deepfakes entirely at this point. It’s obviously possible for the technology to be used in negative / malicious ways (and the media has been very successful at amplifying those uses). [Editor’s note: see Nancy Pelosi video manipulation and Obama’s PSA as examples.] But there are so many other untapped creative possibilities on the positive side as well. There are always trade-offs whenever a new technology is developed. There are no technologies that can be used exclusively for good; in the hands of bad people, anything can be used maliciously. I believe that there are a lot of potential positive uses of this technology, especially as it gets more advanced. It’s possible I’m wrong, but for now at least I’m not convinced that the potential negative uses will outweigh that.

We’re seeing all sorts of virtual and technology-mediated music experiences. How is this changing how much “true identity” matters in our relationship to the artists we love?

I think it’s possible (perhaps even likely) that, as the technology develops, it will eventually reach a point where synthetic artists can sound as good or better than real ones. I’m not sure what will happen after that, but I think a lot of people would still prefer “real” artists to A.I. ones, even if objectively the quality is similar. Also, there are lots of possibilities for collaboration between the two; maybe the line between “real” and “synthetic” will eventually become somewhat blurred.

Emerging Artists Weigh In

Devin: As artists who are coming into the music business when these kinds of emerging technologies are possible, is what Vocal Synthesis did okay?

Jess (aka Money Maka): No, because my voice and my face are my property whether it’s copyrighted or not. It’s like somebody telling you you don’t own your own body.

Oliver (aka Kuya): We all have unique vocal timbres. For somebody to be able to take that and to use it without permission, without any laws in place to protect our rights, you just have to question the intent behind it.

Amari (aka Amari Augst): If you don’t ask “Can I use your voice?” you don’t have permission. It wasn’t the right way to do it.

D: How much do you guys care about the true identity of the artists you love?

Jess (aka Money Maka): When A.I. starts making music, it’s fake. It’s a whole different genre. At that point, I would just say music is damn near dead because then what are we making it for? I thought the point of making music was to be creative. Everybody has their own spin to their own music. But if robots are making music and they can make any specific genre of music, then what are we even doing at this point?

Marquisse (aka Keese Sama): I was just talking to my homie about that. If A.I. gets in this game, I’m not playing. Or at least I’ma do it just for me. I’m not putting the music out to make money. At that point, it’s just therapy.

Jess (aka Money Maka): What are they gonna do with A.I.? Are they going to be like, “This A.I. bot from Mars?” Like, what? I can’t relate to that. Who is going to relate to a robot?

Marquisse (aka Keese Sama): But that’s how we find our sound in a way: you kind of like mesh different artists together. But it’s still just not natural. That’s my thing about it. It’s not a natural process.

Makai: I think it could be that this becomes popular with young people interested in music if they are working on writing and they want to put it behind somebody’s voice. I feel big artists wouldn’t actually cross that line of using somebody else’s voice, because I feel like in a professional art field, art is very important to the artists who are in there.

Jess (aka Money Maka): I think anytime there’s an opportunity to make money, people are going to make money. So, I definitely don’t think it would be off the table. We’re in a really bad position, guys.

D: What do you think it could mean for our music-making to become even less human than it is already?

Marquisse (aka Keese Sama): We’re probably gonna have to come up with better copyright rules. That’s the only thing we could do outside of banning the technology. And I don’t think they gonna do that.

- I like pizza

- I like calamansi soda

- however my spellcheck does not

- summer is a good time for soda

- i like leo season

Maya: And I think that some of my experiences in the studio, you know, are just there’s like a magical energy that happens that is kind of indescribable. That is just raw human energy. When you’re listening to a song and you hear that raspiness in someone’s voice or you hear the voice crack a little bit or the intonation voice that you sing along with every time. It’s just really scary to think that that could be gone forever. It would just be such a loss for the world.

Makai: Without that magical feeling in the booth, I think there is going to be a lot less of the magical feeling in the listener.